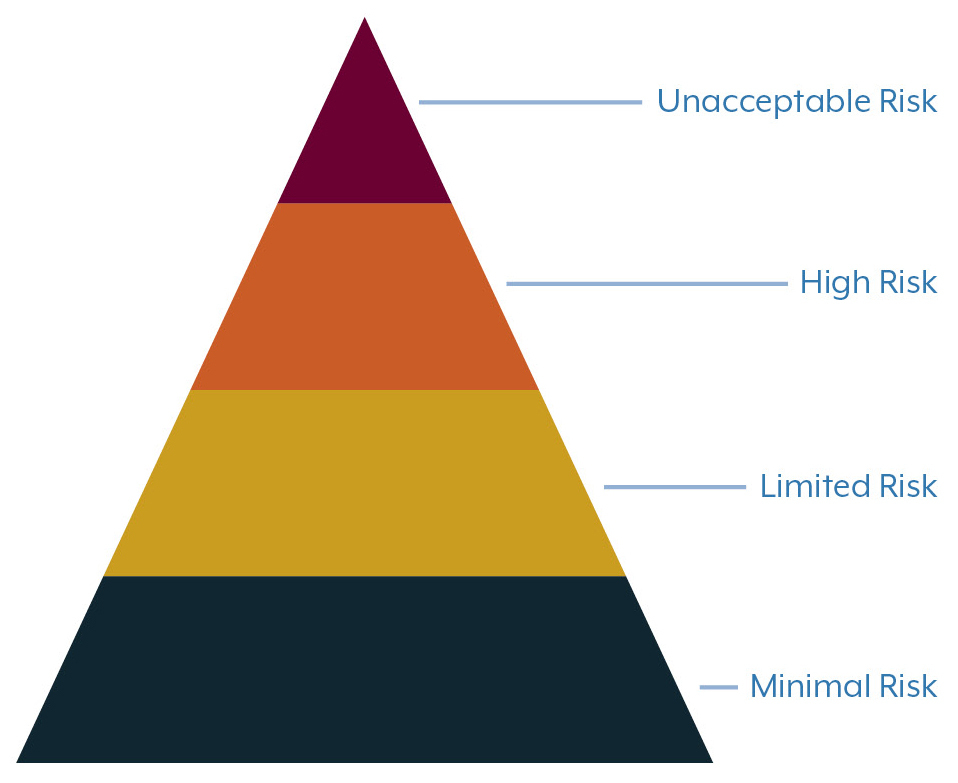

EU AI Act: Risk Categories

The EU AI Act is designed to regulate AI systems on a sliding scale of risk, with four risk categories:

- Unacceptable / prohibited risk

- High risk

- Limited risk

- Minimal or no risk

Your compliance obligations will be dictated by the layer into which your AI system falls.

EU AI Act: Unacceptable Risk

At the top of the pyramid is unacceptable risk: AI systems falling into this category are prohibited. Examples include social scoring systems, predictive policing and, subject to some very narrow exceptions, sophisticated applications of AI that remotely monitor people in real time in public spaces.

EU AI Act: High Risk AI Systems

The next layer down is high-risk AI systems. This is a broader category. It includes AI deployed in medical devices, as a safety component in toys, or in the management of critical infrastructure such as the supply of electricity. It also covers employment recruitment tools, credit scoring applications and grade prediction technology in education.

High-risk AI systems will generate significant compliance challenges for providers of those systems, especially those in the tech industry. There is a list of seven detailed requirements, which will likely cause many providers to significantly adjust their engineering processes and product procedures to ensure compliance.

These are:

- Risk management system

- Accuracy, robustness and cybersecurity

- Data and data governance

- Human oversight

- Transparency and provision of information to users

- Record keeping

- Technical documentation

Many AI providers will never have encountered this type of product regulation before, and complying with it will require significant investment of resources and capital.

The requirement to implement a quality management system to the standard required by EU product safety laws will likely be a significant challenge for those in the tech space.

Find out more about high-risk AI systems.

EU AI Act: Limited Risk AI Systems

AI systems falling into the limited-risk category include deepfakes and chatbots. Their compliance obligations are lighter touch and focus on transparency: the user needs to be informed that they are dealing with an AI system unless that is obvious on the face of it.

Find out more about regulating chatbots and deepfakes under the EU AI Act.

EU AI Act: Minimal or No Risk Systems

AI systems that do not fall into any of the three categories above will not be subject to compliance obligations.

From a technology provider's perspective, the focus of the AI Act will be on the high-risk and limited-risk categories. Those falling into the high-risk category will have a lot of work to do to be compliant and should begin that journey now.

For more information on the EU AI Act or its impacts, contact a member of our dedicated Artificial Intelligence team.

The content of these articles is provided for information purposes only and does not constitute legal or other advice.